Done and Dusted: 5 Things About Data Cleaning You Probably Didn’t Know

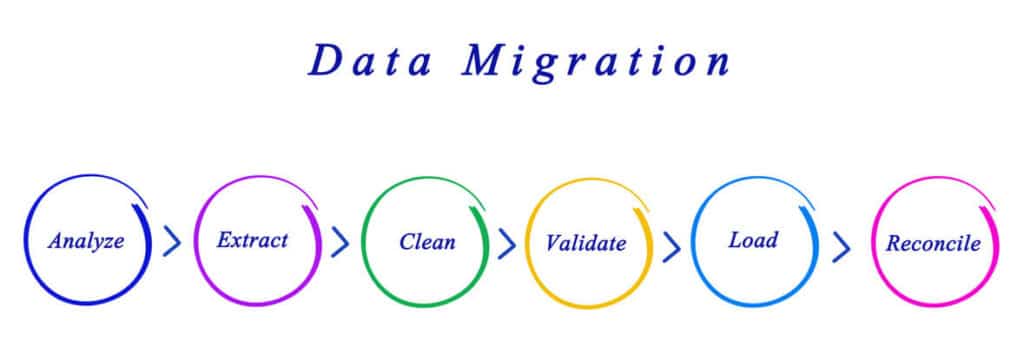

One of the most important parts about a migration into Salesforce is getting legacy data into the system. Unfortunately, this process can become one of the lengthiest and most dangerous parts of an implementation. The reason for this is generally that an implementation specialist has to become intimately aware of the many corners of the legacy data. They not only need to know what field maps to what object but also that there is consistent and valid data that is being inserted in the correct way and at the right time.

One of the most important parts about a migration into Salesforce is getting legacy data into the system. Unfortunately, this process can become one of the lengthiest and most dangerous parts of an implementation. The reason for this is generally that an implementation specialist has to become intimately aware of the many corners of the legacy data. They not only need to know what field maps to what object but also that there is consistent and valid data that is being inserted in the correct way and at the right time.

In order to stay organized and ensure that only high quality data enters the system for the initial data load, a specialist will go through the long and arduous process of verifying and cleaning data when necessary. The following are five common elements of this process. All of these things can be completed by anyone in the organization, but need to be done well and thoroughly to ensure that a data migration goes smoothly and without losing any integrity.

Raw Data Fixes

Raw data can come in all shapes and sizes. Legacy systems can spit out everything from .html to .xml and even then, the resulting data may be in a format that does not play well with other tools. OpFocus has seen cases where serial numbers get translated incorrectly by spreadsheet programs because of errant “E’s”. We’ve developed solutions to modify and translate .xml files, remove incorrectly positioned commas, split up files based on different criteria, and change text encoding. Depending on the situation, different techniques and tools need to be used to translate data sets to Salesforce compliant .csv files.

Takeaway: The resulting raw data file needs to be a UTF-8 Encoded Comma Separated Values file without special characters.

Object Specific Breakouts

Once the raw data is translated into something that is manageable and will go into Salesforce correctly, the next step is to breakout the data into object specific files that have the correct data readily accessible. This means replicating the raw file and organizing the columns in such a way that the fields that need to be mapped into each applicable object are in the first few columns. Sometimes it is easiest to also make sure that the same data types are paired together in order to ensure that the correct formatting gets completed down the line. In general all of the other fields that aren’t being mapped into Salesforce are still kept in tow. This allows us to go back to the success and error files if we ever need to make changes or add in data that was not included initially.

Takeaway: For each object that has data going into it, there should be a separate .csv file with all of the raw data, but different organizations of columns so the most pertinent data comes first.

Standard Field Validation

Sometimes standard fields are required during import. We can’t turn off these requirements for the data migration period, so we must make sure that our data is valid for these fields. For example: Leads require a last name and Opportunities require a name and a stage. In some cases we need to either extrapolate this data from different sources, or take buy-in from the client. The larger the data set, the more this tends to be necessary and can take highly contextual solutions to solve.

Takeaway: Data should be as complete as possible, but in some cases it is required to be complete. Contextually going through the raw data files and making sure everything is where it needs to be will save on errors during the upload process.

Picklist Value Alignment

So much time is spent trying to organize and limit picklist values so that users wont be inundated in options and will thus be more likely to input good data. The benefit of this is better reporting and ultimately better business decisions. The issue, however, is that during a data migration we can upload legacy values into these lists and muddy the waters from the get go. To stop this from happening, wherever a picklist is being mapped to, it is important to go through and translate the raw values into the real values that were put into the system. By doing this, a Salesforce Org can, almost immediately, have clean and reportable data from the first day on, rather than having confusing legacy data that will stick around for years.

Takeaway: Picklist values help us bucket information and can promote clean databases. If these are kept clean to begin with, the database will uphold a higher standard into the future.

Data Formatting Tune Ups

There are some data types that require a specific format before being loaded into Salesforce. These include Booleans, Date, Date & Time and Emails. Catching errors in these data types before they are inserted into Salesforce is important because it will effect how much has to be done to keep records of all data exercises completed (the ultimate goal is to have one compiled success file with all data that was inserted alongside the new Salesforce ID). In general:

- Booleans: [yes, y, true, on, 1] or [no, n, false, off, 0]

- Date: yyyy-mm-dd

- Date & Time: yyyy-mm-ddThh:mm:ss.SSSZ

- Emails: example@blank.com

These aren’t the only formatting options for each of these data types, but in general these will suffice for inserting data.

Takeaway: If the necessary data types are cleaned up before importing data, less errors will need to be handled after the fact.

Inserting legacy data is a very important, albeit somewhat tedious function for Salesforce administrators. We have found that over time these exercises become muscle memory and your data import skills will improve with experience. Have you found this to be true? What are some other data cleansing exercises? Leave us a comment below!